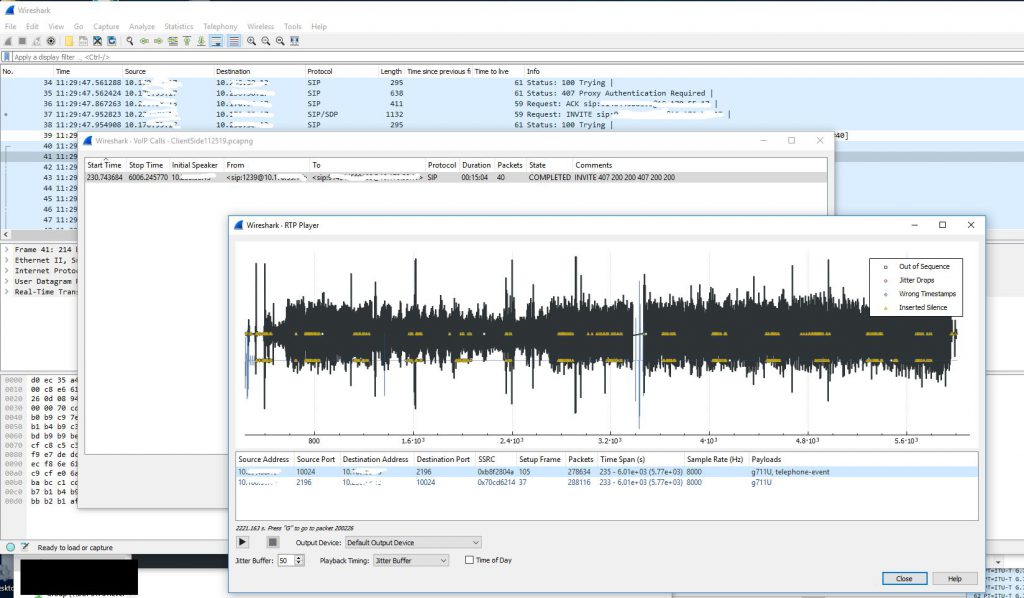

My company recently started using new Biamp TesiraFORTE devices in its newer conference rooms. I have little experience with VoIP (besides my fun with Google Voice and GVSIP) or these types of devices, but I was asked to help diagnose a strange issue where audio going from the Biamp device to the Avaya gateway would randomly cut out for 1-3 minutes. Audio going from the gateway to the device continued to work during the drop. I started by looking at a Wireshark capture from a span port of the Biamp device. This trace initially looked fine to me. I was able to view the RTP traffic and use the RTP player (Telephony -> VoIP Calls). Even during the time of the drop, the audio in this capture played back with no loss.

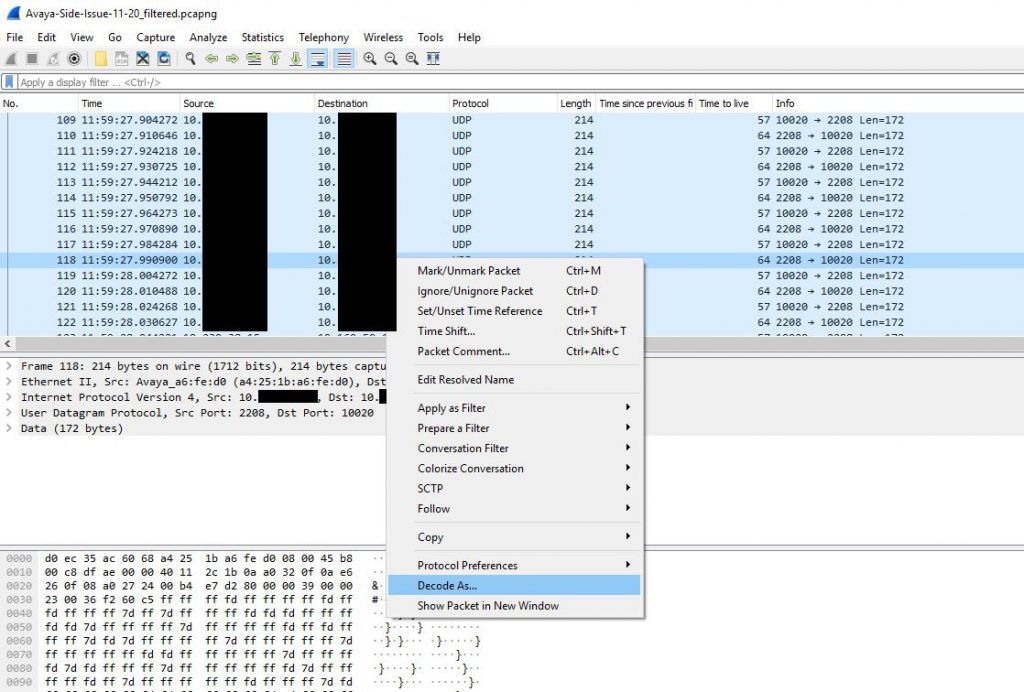

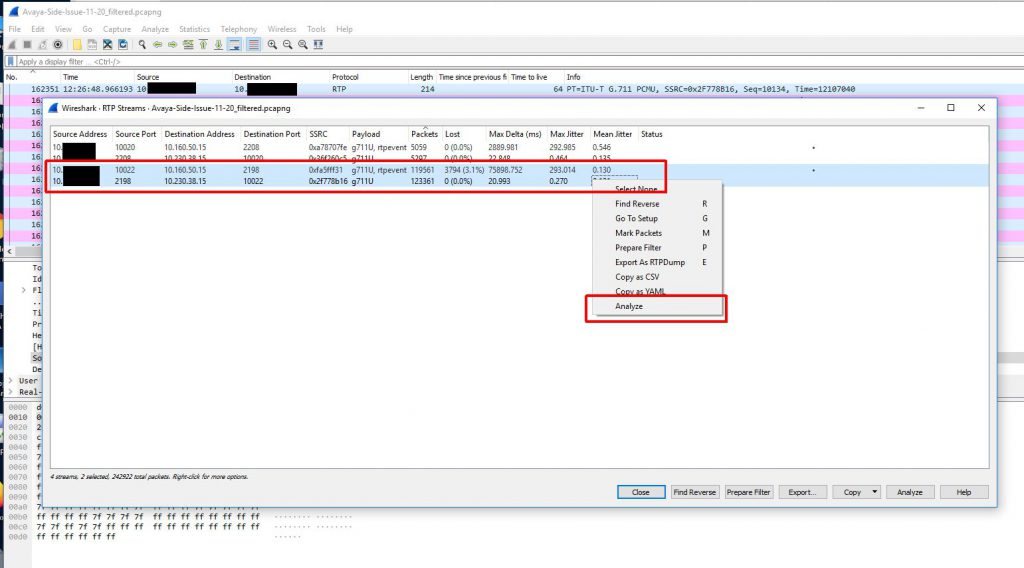

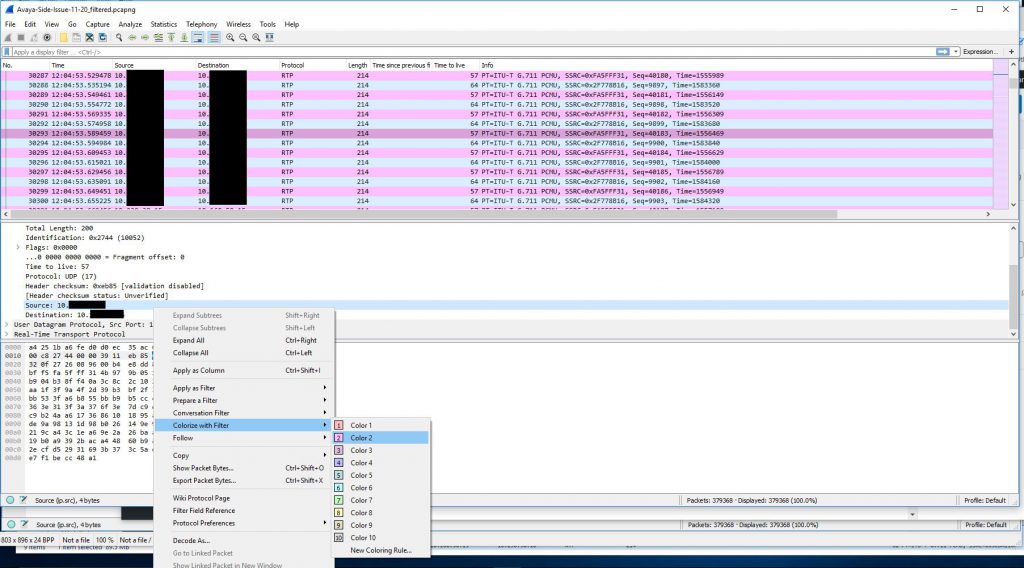

The next step was to get a trace from the other side of the call. To do this we created a span of the switch interface that the gateway was connected to. We then ran a packet capture on that span, using a capture filter to reduce the size of the capture since many other devices (mainly phones) were communicating with this gateway. We just used ‘host <IP address of Biamp device>’ as the capture filter. I colorized the Biamp -> gateway traffic in this capture to make it easier to read. I also had to decode the traffic as RTP since the gateway-side capture didn’t contain the initial SIP handshake.

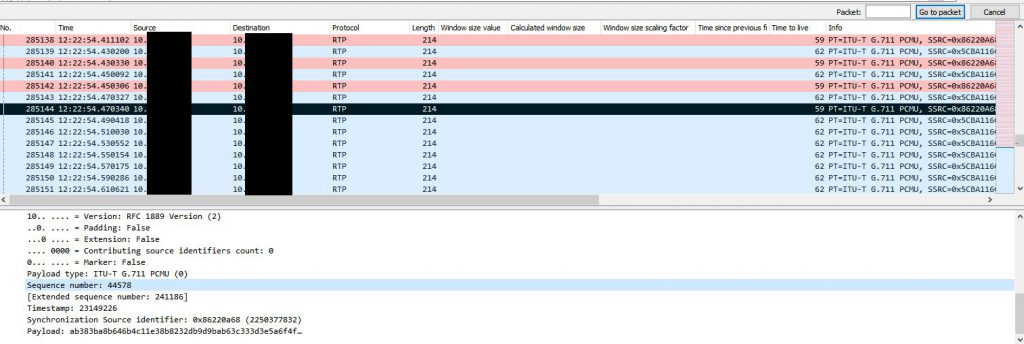

After decoding the raw UDP traffic into RTP traffic and colorizing the Biamp -> gateway traffic we are left with a nice back and forth to look at.
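To illustrate what decoding raw UDP as RTP gives us, here is a minimal Python sketch that reads the 12-byte fixed RTP header (as laid out in RFC 3550) from a UDP payload. This is not how Wireshark does it internally, and the sample payload is made up for illustration; only the sequence number 53054 comes from the captures discussed later in this post.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int


def parse_rtp_header(udp_payload: bytes) -> RtpHeader:
    """Parse the 12-byte fixed RTP header from a UDP payload."""
    if len(udp_payload) < 12:
        raise ValueError("payload too short to be RTP")
    # Network byte order: 2 single bytes, 16-bit sequence, 32-bit timestamp, 32-bit SSRC.
    first, second, seq, ts, ssrc = struct.unpack("!BBHII", udp_payload[:12])
    version = first >> 6  # top two bits of the first byte
    if version != 2:
        raise ValueError(f"unexpected RTP version {version}")
    payload_type = second & 0x7F  # low seven bits; the top bit is the marker
    return RtpHeader(version, payload_type, seq, ts, ssrc)


# Hypothetical payload: version 2, payload type 0, sequence 53054,
# zeroed timestamp/SSRC, followed by 160 bytes of dummy audio.
sample = bytes([0x80, 0x00]) + (53054).to_bytes(2, "big") + bytes(8) + b"\xff" * 160
header = parse_rtp_header(sample)
print(header.sequence)
```

The sequence number is the field that matters most for this investigation, since it lets the same packet be identified in two different captures.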

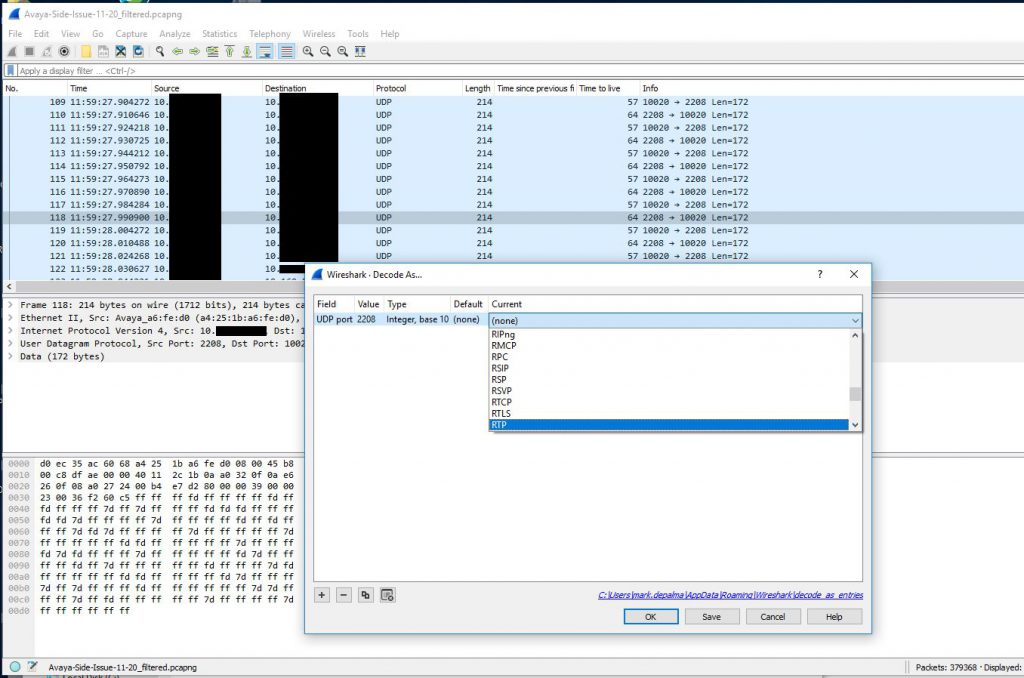

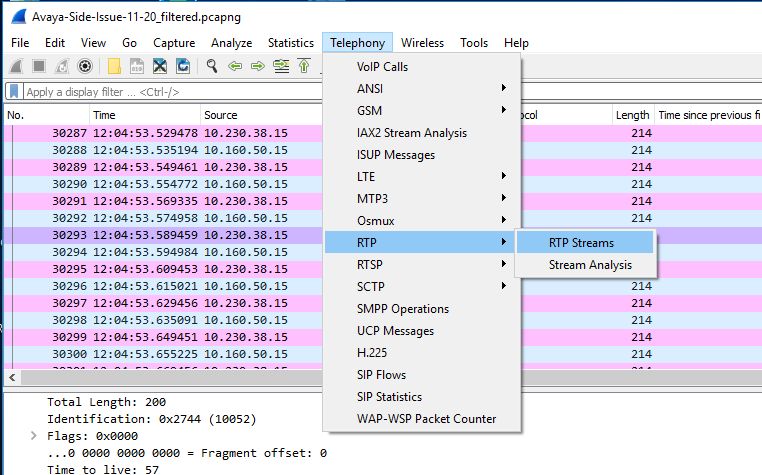

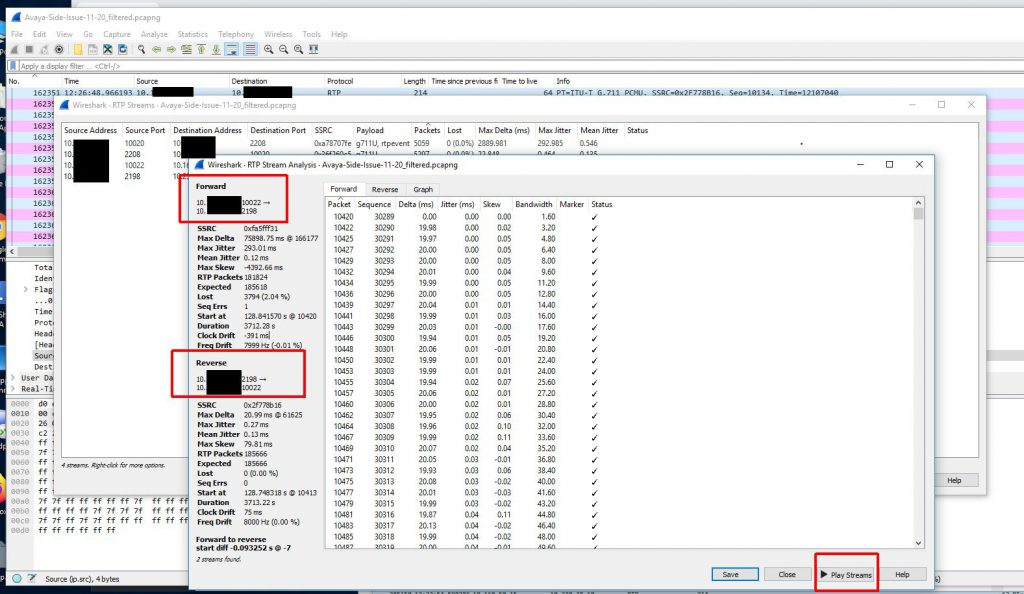

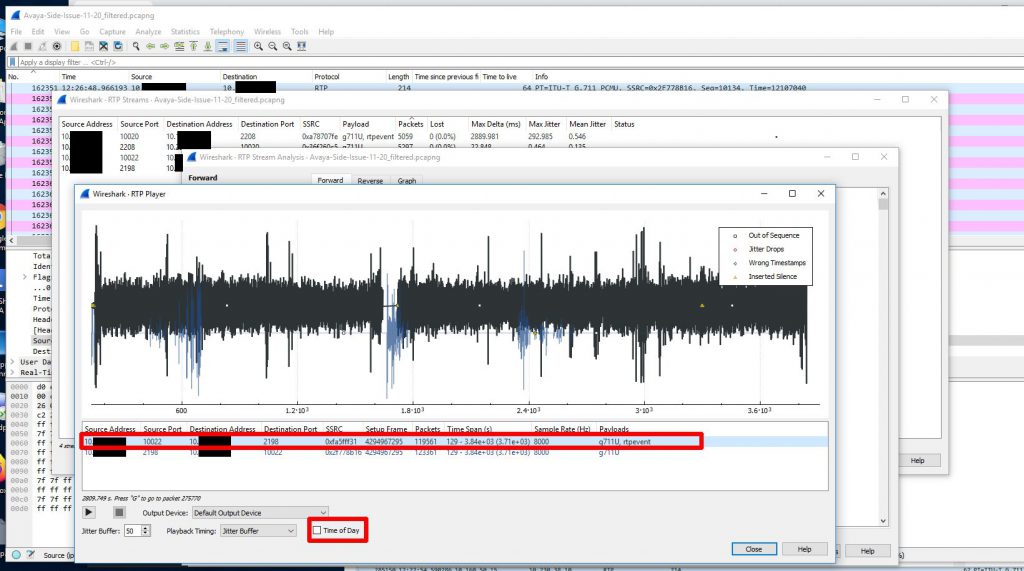

We didn’t capture the SIP handshake on this side. The SIP gateway actually being dialed is in a different datacenter, and it hands the call off to a local gateway in the same building as the Biamp device. Because of that, we need to reconstruct the RTP streams to be able to view them on a graph and play them back.

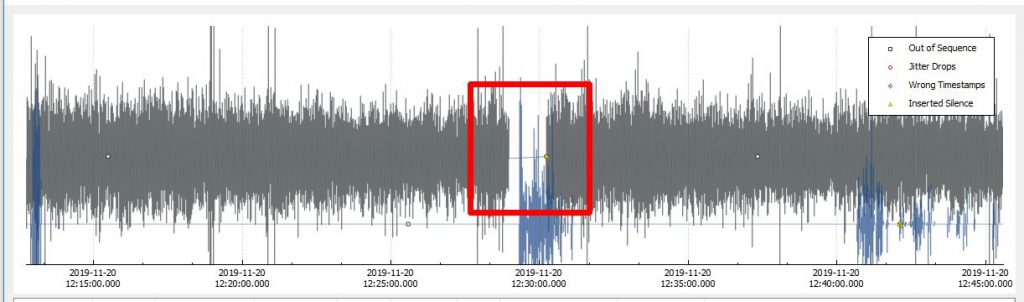

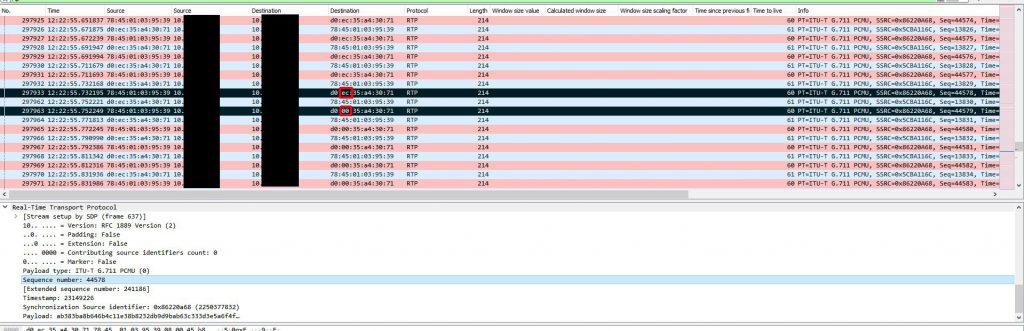

Now that we have a nice graph to look at and audio to listen to, we can home in on the time of the audio loss, which was about 12:26PM. When we do this we can clearly see a loss of sound and traffic at that time.
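The same kind of gap can also be found without the graph by looking at packet arrival times. Below is a rough Python sketch; the function name, the 1-second threshold, and the sample timestamps (packets every 20 ms, then a long silence) are all assumptions for illustration and are not taken from the actual capture.

```python
from typing import List, Tuple


def find_gaps(arrival_times: List[float], threshold: float = 1.0) -> List[Tuple[float, float]]:
    """Return (last_seen, next_seen) pairs where consecutive packets
    arrived more than `threshold` seconds apart."""
    gaps = []
    for prev, cur in zip(arrival_times, arrival_times[1:]):
        if cur - prev > threshold:
            gaps.append((prev, cur))
    return gaps


# Hypothetical arrival times in seconds: five packets 20 ms apart,
# then nothing until t = 90 s, then five more packets.
times = [i * 0.02 for i in range(5)] + [90.0 + i * 0.02 for i in range(5)]
print(find_gaps(times))
```

Feeding in the arrival times of only the Biamp -> gateway stream would report each window where that direction went silent.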

With this we can confirm that the traffic isn’t actually getting to the gateway, so the gateway itself is not the problem. This means the traffic is being lost somewhere between the first switch and the last switch in the path. The next step was to create a span of the trunk leaving the first switch (the switch that the Biamp device was connected to). We saw the same loss of outbound traffic there as well. When the Biamp devices were installed they were hard set to 100 Mb/s full duplex because they supposedly weren’t negotiating correctly and were ending up at 10 Mb/s half duplex. I decided we should work on that, because hard setting speed and duplex like this can hide interface drops. In the process we realized the negotiation problem was actually a cosmetic issue in the configuration GUI, and we upgraded the firmware on the device to resolve it.

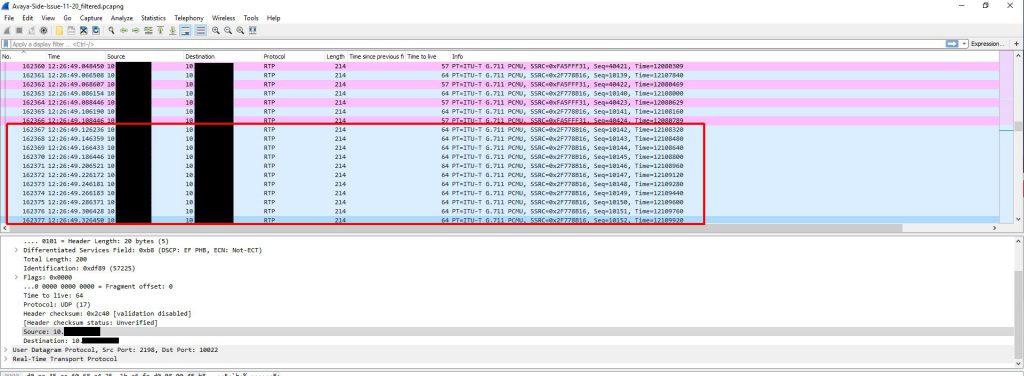

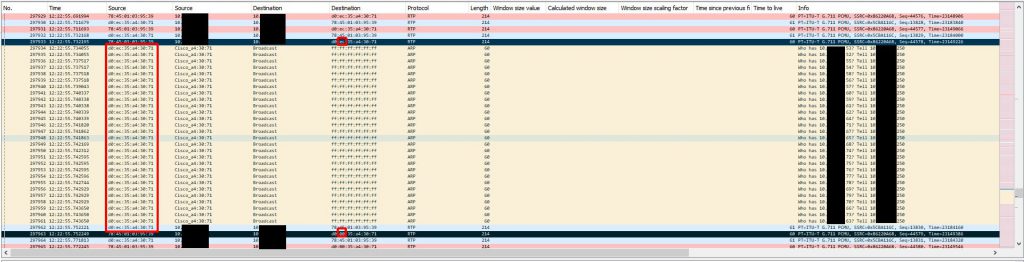

At this point I was a little baffled and had to retrace my steps since this wasn’t making any sense. To do this I took the last RTP packet received before an instance of audio loss in the gateway-side capture and made note of its RTP sequence number. I used this sequence number to locate the same outgoing packet in the Biamp device capture. I then marked this packet and the very next outgoing packet and started comparing them. This is when it got interesting. The destination MAC address changed between the two packets and remained that way for the duration of the audio loss. What made it even more interesting is that the only thing that changed in the MAC address was the second byte, which went from EC to 00. The original (correct) MAC address was the address of the VLAN SVI (gateway). At this point I added columns for the MAC addresses to my Wireshark view.
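The comparison I did by eye can be sketched in a few lines of Python. The MAC addresses below are made up for illustration (only the second byte, EC versus 00, mirrors what we actually saw), and the helper names are hypothetical.

```python
from typing import List, Tuple


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' (or dash-separated) into 6 raw bytes."""
    parts = mac.replace("-", ":").split(":")
    if len(parts) != 6:
        raise ValueError(f"not a MAC address: {mac!r}")
    return bytes(int(part, 16) for part in parts)


def differing_octets(mac_a: str, mac_b: str) -> List[Tuple[int, str, str]]:
    """Return (1-based position, value in mac_a, value in mac_b) for each octet that differs."""
    a, b = mac_to_bytes(mac_a), mac_to_bytes(mac_b)
    return [
        (position, f"{x:02X}", f"{y:02X}")
        for position, (x, y) in enumerate(zip(a, b), start=1)
        if x != y
    ]


# Made-up addresses: destination MAC of the last 'good' and first 'bad' RTP packet.
good_dst = "00:EC:AC:12:34:56"
bad_dst = "00:00:AC:12:34:56"
print(differing_octets(good_dst, bad_dst))  # [(2, 'EC', '00')]
```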

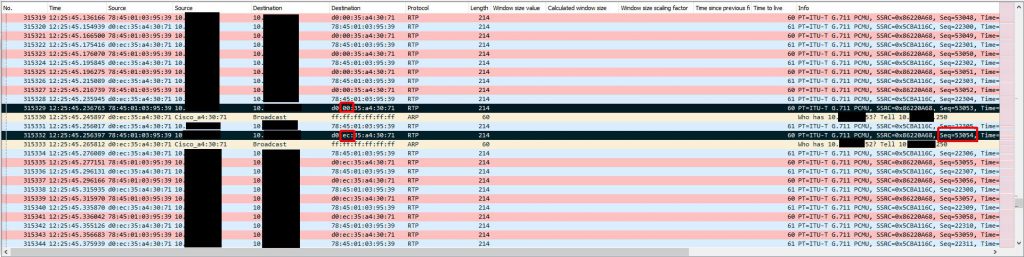

To figure out what could have caused this, let’s keep the first marked packet highlighted and remove our display filter (I was filtering down to SIP + RTP traffic in most of these screenshots) to see all traffic on the Biamp device span. The ONLY traffic visible to the Biamp device between the last ‘good’ RTP packet and the first ‘bad’ RTP packet is a series of ARP request broadcasts from the switch. These were normal ARP requests asking who had a series of IP addresses. It seems that when the Biamp device sees a number of these ARP request broadcasts it either relearns the switch’s MAC address incorrectly, or the ARP request broadcasts somehow trigger a software bug that essentially poisons the Biamp device’s ARP cache by causing that second byte to go from EC to 00.
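The "what happened in between" step can also be expressed as a small Python sketch. It assumes the capture has already been reduced to a list of simple records with a `kind` and a `dst` field; that record layout, the `rtp_out` label for outbound RTP from the device, and the sample data are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Frame = Dict[str, str]  # hypothetical record, e.g. {"kind": "rtp_out", "dst": "<mac>"}


def frames_around_mac_change(
    frames: List[Frame], stream_kind: str = "rtp_out"
) -> Optional[Tuple[int, int, List[Frame]]]:
    """Find the first place where the stream's destination MAC changes.

    Returns (index of last 'good' frame, index of first 'bad' frame,
    every frame seen in between), or None if the MAC never changes.
    """
    last_idx = None
    for idx, frame in enumerate(frames):
        if frame["kind"] != stream_kind:
            continue  # only frames belonging to the stream are compared
        if last_idx is not None and frame["dst"] != frames[last_idx]["dst"]:
            return last_idx, idx, frames[last_idx + 1:idx]
        last_idx = idx
    return None


# Made-up capture: two good RTP frames, two ARP broadcasts, then bad RTP frames.
capture = [
    {"kind": "rtp_out", "dst": "00:EC:AC:12:34:56"},
    {"kind": "rtp_out", "dst": "00:EC:AC:12:34:56"},
    {"kind": "arp", "dst": "FF:FF:FF:FF:FF:FF"},
    {"kind": "arp", "dst": "FF:FF:FF:FF:FF:FF"},
    {"kind": "rtp_out", "dst": "00:00:AC:12:34:56"},
    {"kind": "rtp_out", "dst": "00:00:AC:12:34:56"},
]
last_good, first_bad, between = frames_around_mac_change(capture)
print(last_good, first_bad, [frame["kind"] for frame in between])
```

In our case everything in that in-between window was an ARP request broadcast, which is what pointed us at ARP handling on the device.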

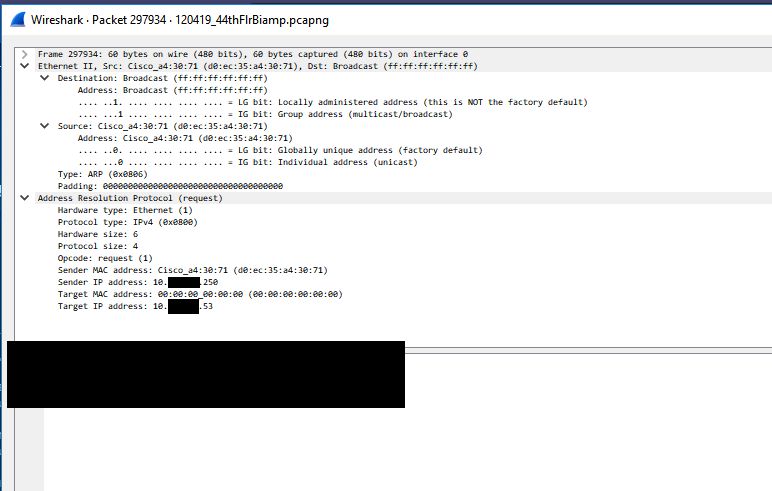

Even though these ARP requests are normal and shouldn’t cause any issues, we traced the reason for them back to a network scan happening on this subnet at the time. None of the IP addresses being requested are valid addresses, and the network scan is what triggered the switches to start searching for them via ARP requests. Below is one of the ARP request packets. There is nothing in this packet that references the ‘bad’ switch MAC address with 00 in the second byte instead of EC.

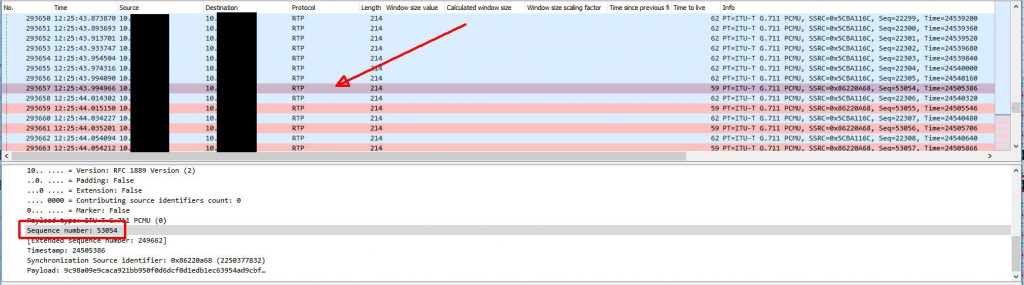

The next thing to look at is what actually causes the audio to be restored after a few minutes. Below is the same trace, but later on when the audio loss ends. The first marked (black) packet is the last ‘bad’ RTP packet and the last marked (black) packet is the first ‘good’ RTP packet when audio is restored. With no filter applied we can see that the ONLY thing happening between these two packets is ANOTHER ARP request broadcast. This ARP request seems to cause the Biamp device to re-learn the switch’s MAC address properly which restores RTP traffic delivery. The first ‘good’ RTP packet is the RTP packet with sequence number 53054. We can use the capture on the gateway side to find this packet.

With this new knowledge we were able to reproduce the issue on demand by scanning 20-30 non-existent IPs on the subnet which triggered the ARP requests. We could then restore audio by doing the same scan. Sometimes it would take a few extra scans to trigger the behavior.

We handed all of this information off to Biamp and they are still investigating the issue. When they come back with more information and/or a resolution I will update this thread. An important lesson here is to pay attention to the details. I technically had all the data I needed to figure out the issue in the original capture on the first day I was involved, but I wasn’t forced to look more closely until I looked at many other things.

UPDATE (2/7/20): Biamp eventually was able to reproduce the issue in their lab after we handed over the findings. They have since released a firmware update (3.14.1) that resolves this issue. The fix is listed as ‘Fixed issue where unsolicited ARPs impact the VoIP ARP table’.